TensorFlow 2

Recently, after getting a new 3090 GPU, I needed to update TensorFlow to version 2. Going from TensorFlow 1 to TensorFlow 2 brought way too many breaking changes for me. Other GitHub examples of TensorFlow 2 code (e.g. an updated Style Transfer script) gave me all sorts of errors. It was not just one repo either; lots of supposed TensorFlow 2 code would not work for me. If it is a pain for me, it is going to be a bigger annoyance for my users. I already get enough emails saying “I followed your TensorFlow instructions exactly, but it doesn’t work”. I am in no way an expert in Python, TensorFlow or PyTorch, so I need something that “just works” most of the time.

I did manage to get the current TensorFlow 1 scripts in Visions of Chaos running under TensorFlow 2, so at least the existing TensorFlow functionality will still work.

PyTorch

After having a look around and watching some YouTube videos I wanted to give PyTorch a go.

The install is a single pip command that the PyTorch home page builds for you after you select your OS, CUDA version, etc. So for my current TensorFlow tutorial (maybe I now need to rename it “Machine Learning Tutorial”) all I needed to do was add one more line to the pip install section.

pip install torch==1.8.1+cu111 torchvision==0.9.1+cu111 torchaudio===0.8.1 -f https://download.pytorch.org/whl/torch_stable.html
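
If you want to confirm the install picked up the GPU before running anything bigger, a quick check like the one below should do it. This is only a minimal sketch, not part of the Visions of Chaos scripts: the helper names parse_torch_version and describe_install are made up for illustration, and it assumes nothing beyond the standard torch.__version__ and torch.cuda calls.

```python
import importlib.util


def parse_torch_version(version):
    """Split a version string such as '1.8.1+cu111' into (release, build tag).

    The build tag is None when the string has no '+...' suffix.
    (Hypothetical helper, named here for illustration only.)
    """
    release, _, build = str(version).partition("+")
    return release, (build or None)


def describe_install():
    """Return a one-line summary of the PyTorch install, or a hint if it is missing."""
    # Check for the package first so a missing install gives a friendly message
    # rather than an ImportError traceback.
    if importlib.util.find_spec("torch") is None:
        return "PyTorch is not installed in this Python environment."

    import torch

    release, build = parse_torch_version(torch.__version__)
    if torch.cuda.is_available():
        device = torch.cuda.get_device_name(0)  # name of the first visible GPU
    else:
        device = "no CUDA GPU visible, CPU only"
    return f"PyTorch {release} (build {build or 'default'}): {device}"


if __name__ == "__main__":
    print(describe_install())
```

If the last part of the line says CPU only on a machine with an NVIDIA card, the usual suspects are a CPU-only wheel (no +cu tag in the version) or an outdated GPU driver.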

PyTorch Style Transfer

The first Google hit is the PyTorch tutorial here. After spending most of a day banging my head against the wall with TensorFlow 2 errors, that single self-contained Python script using PyTorch “just worked”! The settings do seem harder to tweak to get a good-looking output compared to the TensorFlow Style Transfer script I used previously. After making the following examples I may need to look for another PyTorch Style Transfer script.

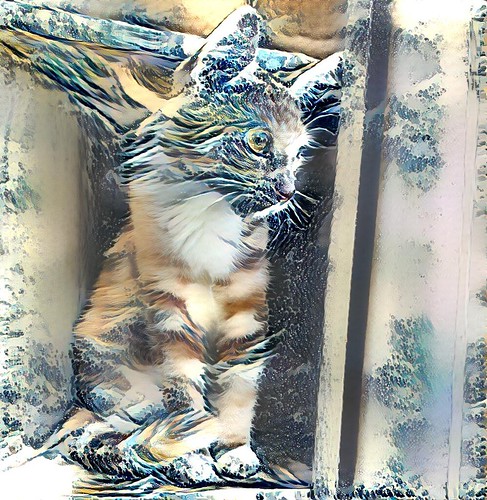

Here are some example results using Biscuit as the source image.

PyTorch DeepDream

Next up was ProGamerGov’s PyTorch DeepDream implementation. Again, it worked fine. I have used ProGamerGov’s TensorFlow DeepDream code in the past and it worked just as well this time. It comes with a bunch of other models to use too, so a wider variety of DeepDream outputs is now available in Visions of Chaos.

PyTorch StyleGAN2 ADA

This uses NVIDIA’s official PyTorch implementation from here. It was also easy to get working. You can quickly generate images from existing models.

I include the option to train your own models from a bunch of images. Pro tip: if you do not want to have nightmares, do not experiment with training a model based on a bunch of naked women photos.

Going Forward

After these early experiments with PyTorch, I am going to use PyTorch from now on wherever possible.

Jason.